Lecture recording (Feb 20, 2025) here.

Lab recording (Feb 21, 2025) here.

There is a big and understandable expectation that all applications run as fast as possible. When applications run fast, a system can fulfill the business requirements quickly, which puts the organization in a position to expand its business and handle future needs as well. A system or a product that is not able to service business transactions due to its slow performance is a big loss for the product organization, its customers, and its customers' customers.

| Performance Testing: | Overview on Performance Testing |

| Performance Testing Tutorial For Beginners | |

| What is Performance Testing? |

Assignment 2 - White Box Testing

Assignment 3 - Performance Testing of a Custom Database

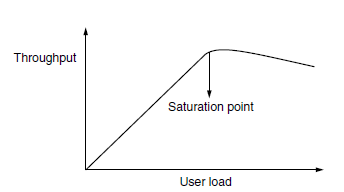

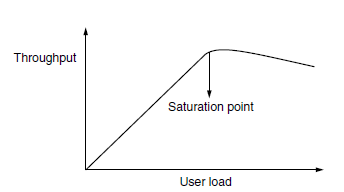

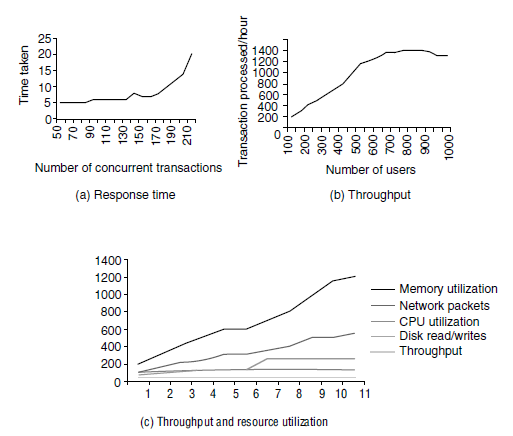

The capability of the system or the product in handling multiple transactions is determined by a factor called throughput.

Throughput represents the number of requests/business transactions processed by the product in a specified time duration. It is important to understand that the throughput (that is, the number of transactions serviced by the product per unit time) varies according to the load the product is subjected to. The below figure is an example of the throughput of a system at various load conditions.

The load to the product can be increased by increasing the number of users or by increasing the number of concurrent operations of the product.

In the above figure, it can be noticed that initially the throughput keeps increasing as the user load increases. This is the ideal situation for any product and indicates that the product is capable of delivering more when there are more users trying to use the product. In the second part of the graph, beyond certain user load conditions (after the bend), it can be noticed that the throughput comes down. This is the period when the users of the system notice a lack of satisfactory response and the system starts taking more time to complete business transactions. The optimum throughput is represented by the saturation point and is the one that represents the maximum throughput for the product.
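As a minimal sketch of how the saturation point could be read off measured data, the snippet below picks the load at which throughput peaks. The function name `saturation_point` and the sample measurements are hypothetical and are not taken from the figure.

```python
def saturation_point(samples):
    """Return the (load, throughput) pair with the highest throughput.

    `samples` is a list of (concurrent_users, transactions_per_second) pairs.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    return max(samples, key=lambda pair: pair[1])


# Hypothetical measurements: throughput rises, peaks, then falls after the bend.
measurements = [(10, 95.0), (50, 430.0), (100, 780.0), (200, 910.0), (400, 640.0)]
load, peak = saturation_point(measurements)
print(f"saturation at {load} users, {peak} transactions/s")
```

With real data the peak is rarely this clean, so repeated runs at each load level are usually averaged before the peak is chosen.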

Throughput represents how many business transactions can be serviced in a given duration for a given load. It is equally important to find out how much time each of the transactions took to complete. Customers might go to a different website or application if a particular request takes more time on this website or application. Hence measuring response time becomes an important activity of performance testing. Response time can be defined as the delay between the point of request and the first response from the product. In a typical client-server environment, throughput represents the number of transactions that can be handled by the server and response time represents the delay between the request and response.
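The two measures can be collected together in a simple timing loop. The sketch below is an illustration only: `measure` and `sample_transaction` are hypothetical names, the transaction is a stand-in that sleeps for about a millisecond, and the loop times the whole call rather than the first response from a real server.

```python
import time


def measure(transaction, runs):
    """Run `transaction` `runs` times; return (throughput, mean_response_time)."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    response_times = []
    start = time.perf_counter()
    for _ in range(runs):
        t0 = time.perf_counter()
        transaction()
        response_times.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    throughput = runs / elapsed  # transactions per second
    mean_response = sum(response_times) / runs  # seconds per transaction
    return throughput, mean_response


def sample_transaction():
    # Stand-in for a real request; sleeps for about 1 ms.
    time.sleep(0.001)


tput, resp = measure(sample_transaction, runs=50)
print(f"throughput: {tput:.1f} tx/s, mean response time: {resp * 1000:.2f} ms")
```

This loop issues transactions one at a time from a single client, so it says nothing about behavior under concurrent load; a load tool is needed for that.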

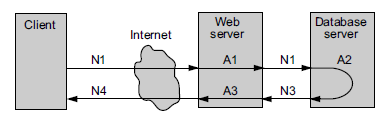

In reality, not all the delay that happens between the request and the response is caused by the product. In a networked scenario, the network or other products sharing the network resources can cause delays. Hence, it is important to know what delay the product causes and what delay the environment causes. This brings up yet another factor for performance - latency. Latency is the delay caused by the application, the operating system, and the environment, each of which is calculated separately. The below figure shows a web application providing a service by talking to a web server and a database server connected in the network.

By using the above picture, latency and response time can be calculated as

Network latency = N1 + N2 + N3 + N4

Product latency = A1 + A2 + A3

Actual response time = Network latency + Product latency
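A short worked example of the three formulas above, using made-up delay values in milliseconds (the numbers and the function name `response_time` are assumptions for illustration, not measurements from the figure):

```python
def response_time(network_delays, product_delays):
    """Return (network_latency, product_latency, actual_response_time)."""
    network_latency = sum(network_delays)  # N1 + N2 + N3 + N4
    product_latency = sum(product_delays)  # A1 + A2 + A3
    return network_latency, product_latency, network_latency + product_latency


# Hypothetical delays in milliseconds.
n = [5, 7, 6, 4]   # N1..N4
a = [20, 35, 15]   # A1..A3
network, product, total = response_time(n, a)
print(network, product, total)  # 22 70 92
```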

The next factor that governs performance testing is tuning. Tuning is a procedure by which the product performance is enhanced by setting different values to the parameters (variables) of the product, operating system, and other components. Tuning improves the product performance without having to touch the source code of the product. Each product may have certain parameters or variables that can be set at run time to gain optimum performance. The default values that are assumed by such product parameters may not always give optimum performance for a particular deployment. This makes it necessary to change the values of parameters or variables to suit the deployment or a particular configuration. During performance testing, tuning of parameters is an important activity that needs to be done before collecting actual numbers.

Yet another factor that needs to be considered for performance testing is performance of competitive products. A very well-improved performance of a product makes no business sense if that performance does not match up to the competitive products. Hence it is very important to compare the throughput and response time of the product with those of the competitive products. This type of performance testing wherein competitive products are compared is called benchmarking. No two products are the same in features, cost, and functionality. Hence, it is not easy to decide which parameters must be compared across two products. A careful analysis is needed to chalk out the list of transactions to be compared across products, so that an apples-to-apples comparison becomes possible. This produces meaningful analysis to improve the performance of the product with respect to competition.

One of the most important factors that affect performance testing is the availability of resources. The right kind of configuration (both hardware and software) is needed to derive the best results from performance testing and for deployments. The exercise to find out what resources and configurations are needed is called capacity planning. The purpose of a capacity planning exercise is to help customers plan for the set of hardware and software resources prior to installation or upgrade of the product. This exercise also sets the expectations on what performance the customer will get with the available hardware and software resources.

Performance testing is the testing performed to evaluate the response time, throughput, and utilization of a system as it executes its required functions, in comparison with different versions of the same product or with competitive products. To summarize, performance testing is done to ensure that a product

Performance testing is complex and expensive due to large resource requirements and the time it takes. Hence, it requires careful planning and a robust methodology. A methodology for performance testing involves the following steps.

Collecting Requirements

Collecting requirements is the first step in planning the performance testing. Firstly, a performance testing requirement should be testable - not all features/functionality can be performance tested. Secondly, a performance testing requirement needs to clearly state what factors need to be measured and improved. Lastly, a performance testing requirement needs to be associated with the actual number or percentage of improvement that is desired. Given these challenges, a key question is how requirements for performance testing can be derived. There are several sources for deriving performance requirements. Some of them are as follows.

There are two types of requirements performance testing focuses on - generic requirements and specific requirements. Generic requirements are those that are common across all products in the product domain area. All products in that area are expected to meet those performance expectations. Specific requirements are those that depend on implementation for a particular product and differ from one product to another in a given domain.

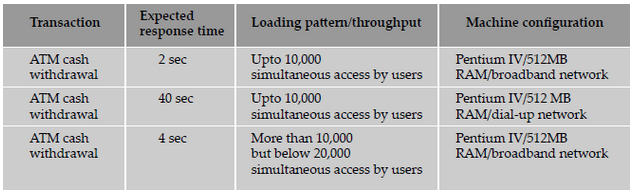

The requirements for performance testing also include the load pattern and resource availability and what is expected from the product under different load conditions. Hence, while documenting the expected response time, throughput, or any other performance factor, it is equally important to map different load conditions as illustrated in the example in the table below.

Beyond a particular load, any product shows some degradation in performance. While it is easy to understand this phenomenon, it is very difficult to do a performance test without knowing the degree of degradation that is acceptable for each load condition. Massive degradation in performance beyond a certain degree is not acceptable to users. Performance values that stay within acceptable limits as the load increases are described by the term graceful performance degradation. A performance test conducted for a product needs to validate this graceful degradation as one of the requirements.
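One way to validate graceful degradation is to compare measured values against the documented limit for each load condition. The sketch below assumes a hypothetical requirement table (users mapped to a maximum acceptable response time in milliseconds) and hypothetical measurements; neither comes from the course material.

```python
def degradation_violations(expected_max_ms, measured_ms):
    """Return the loads whose measured response time exceeds the documented limit.

    Both arguments map a load (number of users) to a response time in ms.
    Every load in `expected_max_ms` must also be present in `measured_ms`.
    """
    return [
        load
        for load, limit in sorted(expected_max_ms.items())
        if measured_ms[load] > limit
    ]


# Hypothetical requirement: response time may grow with load, but only so far.
expected_max_ms = {100: 200, 500: 300, 1000: 500}
measured_ms = {100: 150, 500: 290, 1000: 820}

violations = degradation_violations(expected_max_ms, measured_ms)
print(violations)  # [1000]
```

An empty result would mean the degradation stayed within the documented limits at every tested load.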

Writing Test Cases

The next step involved in performance testing is writing test cases. A test case for performance testing should have the following details defined.

Performance test cases are repetitive in nature. These test cases are normally executed repeatedly for different values of parameters, different load conditions, different configurations, and so on. Hence, the details of what tests are to be repeated for what values should be part of the test case documentation.
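The repetition described above can be documented as a matrix of runs. The sketch below uses `itertools.product` to enumerate every combination; the parameter names and values are hypothetical placeholders.

```python
from itertools import product

# Hypothetical values over which one test case is repeated.
user_loads = [100, 500, 1000]
cache_sizes_mb = [64, 256]
configurations = ["2 CPU / 4 GB", "4 CPU / 8 GB"]

test_runs = [
    {"users": users, "cache_mb": cache_mb, "config": config}
    for users, cache_mb, config in product(user_loads, cache_sizes_mb, configurations)
]

print(len(test_runs))  # 12
print(test_runs[0])
```

Because the number of runs is the product of the list lengths, it grows quickly, which is one reason the lists of values are worth fixing in the test case documentation up front.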

Automating Performance Test Cases

Automation is an important step in the methodology for performance testing. Performance testing naturally lends itself to automation due to the following characteristics.

End-to-end automation is required for performance testing. Not only the steps of the test cases, but also the setup required for the test cases, setting different values to parameters, creating different load conditions, setting up and executing the steps for operations/transactions of competitive product, and so on have to be included as part of the automation script. While automating performance test cases, it is important to use standard tools and practices. Since some of the performance test cases involve comparison with the competitive product, the results need to be consistent, repeatable, and accurate due to the high degree of sensitivity involved.

Executing Performance Test Cases

Performance testing generally involves less effort for execution but more effort for planning, data collection, and analysis. As discussed earlier, 100% end-to-end automation is desirable for performance testing and if that is achieved, executing a performance test case may just mean invoking certain automated scripts. However, the most effort-consuming aspect in execution is usually data collection. Data corresponding to the following points needs to be collected while executing performance tests.

Another aspect involved in performance test execution is scenario testing. A set of transactions/operations that are usually performed by the user forms the scenario for performance testing. This testing is done to verify whether a mix of operations/transactions performed concurrently by different users/machines meets the performance criteria. In real life, not all users perform the same operation all the time, and hence these tests are performed.
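A scenario can be approximated by drawing operations according to an assumed usage mix. In the sketch below, the operation names, the weights, and the helper `build_scenario` are all hypothetical; a real mix would come from observed usage data.

```python
import random
from collections import Counter


def build_scenario(mix, total_operations, seed=0):
    """Draw `total_operations` operation names according to the weights in `mix`."""
    rng = random.Random(seed)  # fixed seed keeps the scenario repeatable
    operations = list(mix)
    weights = [mix[op] for op in operations]
    return rng.choices(operations, weights=weights, k=total_operations)


# Hypothetical mix: most users browse, some search, few check out.
mix = {"browse": 70, "search": 20, "checkout": 10}
scenario = build_scenario(mix, total_operations=1000)
counts = Counter(scenario)
print(counts)
```

Seeding the generator matters here because performance results need to be consistent and repeatable across runs.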

What performance a product delivers for different configurations of hardware and network setup, is another aspect that needs to be included during execution. This requirement mandates the need for repeating the tests for different configurations. This is referred to as configuration performance tests. This test ensures that the performance of the product is compatible with different hardware, utilizing the special nature of those configurations and yielding the best performance possible. For a given configuration, the product has to give the best possible performance, and if the configuration is better, it has to get even better.

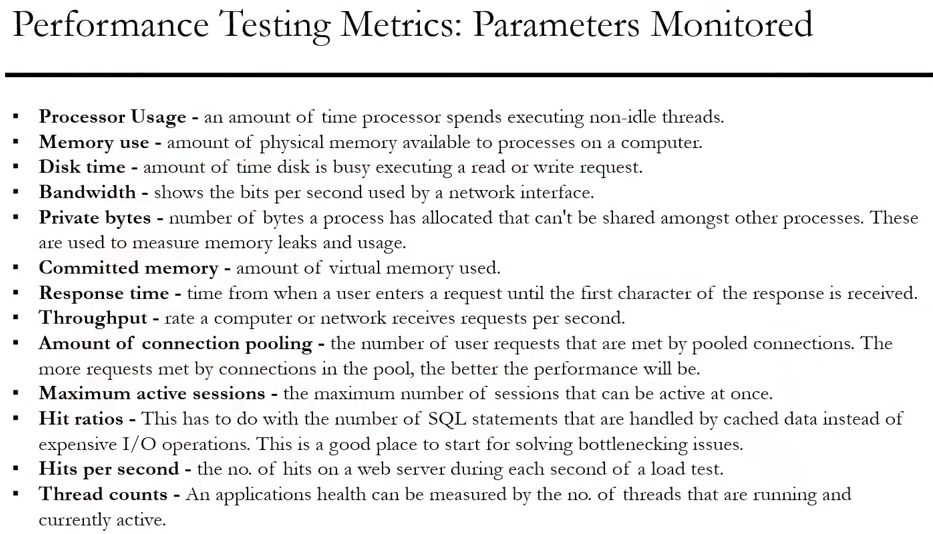

Once performance tests are executed and various data points are collected, the next step is to plot them. As explained earlier, performance test cases are repeated for different configurations and different values of parameters. Hence, it makes sense to group them and plot them in the form of graphs and charts. Plotting the data helps in making a quick analysis which would otherwise be difficult to do with only the raw data. The figure below illustrates how performance data can be plotted.

Analyzing the Performance Test Results

Analyzing the performance test results requires multi-dimensional thinking. This is the most complex part of performance testing, where product knowledge, analytical thinking, and statistical background are all essential. Before analyzing the data, some calculations on the data and organization of the data are required. The following come under this category.

When a set of performance numbers comes from multiple runs of the same test, a few of the iterations may contain errors committed by the scripts, the software, or a human. Taking such erroneous executions into account may not be appropriate, and such values need to be ignored. The process of removing unwanted values from a set is called noise removal. When some values are removed from the set, the mean and standard deviation need to be recalculated.
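A minimal sketch of noise removal follows, assuming each run is recorded together with a flag saying whether an error occurred during that iteration. The data and the `remove_noise` helper are hypothetical; how erroneous runs are identified in practice depends on the test setup.

```python
from statistics import mean, stdev


def remove_noise(runs):
    """Keep only the values from runs that completed without a recorded error."""
    return [value for value, had_error in runs if not had_error]


# Hypothetical response times in ms; the third run hit a script error.
runs = [(102, False), (98, False), (450, True), (101, False), (99, False), (100, False)]

all_values = [value for value, _ in runs]
clean = remove_noise(runs)

print("before:", mean(all_values), stdev(all_values))
print("after: ", mean(clean), stdev(clean))
```

Here a single erroneous run pulls the mean far above the typical value, and recalculating after removal brings both the mean and the standard deviation back in line with the valid runs.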

The majority of the server-client, Internet, and database applications store the data in a local high-speed buffer when a query is made. This enables them to present the data quickly when the same request is made again. This is called caching. The performance data need to be differentiated according to where the result is coming from - the server or the cache. The data points can be kept as two different data sets - one for cache and one coming from server. Keeping them as two different data sets enables the performance data to be extrapolated in future, based on the hit ratio expected in deployments.
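Keeping the two data sets separate makes a simple extrapolation possible: weight each by the hit ratio expected in the deployment. The sketch below assumes a single average response time per data set and uses made-up numbers; the function name `expected_response_ms` is hypothetical.

```python
def expected_response_ms(cache_ms, server_ms, hit_ratio):
    """Weighted average response time for a given cache hit ratio (0.0 to 1.0)."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * server_ms


# Hypothetical averages: 5 ms from the cache, 120 ms from the server.
estimate = expected_response_ms(cache_ms=5.0, server_ms=120.0, hit_ratio=0.75)
print(estimate)  # 33.75
```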

Some time-initiated activities of the product or background activities of the operating system and network may have an effect on the performance data. An example of one such activity is garbage collection/defragmentation in memory management of the operating system or a compiler. When such activities are initiated in the background, degradation in the performance may be observed. Identifying such background events, separating the affected data points, and analyzing them separately helps in presenting the right performance data.

Once the data sets are organized (after appropriate noise removal and after appropriate refinement as mentioned above), the analysis of performance data is carried out to conclude the following.

Performance Tuning

Analyzing performance data helps in narrowing down the list of parameters that really impact the performance results and in improving product performance. Once the parameters are narrowed down to a few, the performance test cases are repeated for different values of those parameters to further analyze their effect in getting better performance. This performance-tuning exercise needs a high degree of skill in identifying the list of parameters and their contribution to performance. Understanding each parameter and its impact on the product is not sufficient for performance tuning. Combinations of parameters also cause changes in performance, so the relationship among the various parameters and their combined impact becomes very important to performance tuning. There are two steps involved in getting the optimum mileage from performance tuning. They are

There are a set of parameters associated with the product where the administrators or users of the product can set different values to obtain optimum performance. Some of the common practices are providing a number of forked processes for performing parallel transactions, caching and memory size, creating background activities, deferring routine checks to a later point of time, providing better priority to a highly used operation/transaction, disabling low-priority operations, changing the sequence or clubbing a set of operations to suit the resource availability, and so on. Setting different values to these parameters enhances the product performance. The product parameters in isolation as well as in combination have an impact on product performance. Hence it is important to

Tuning the OS parameters is another step towards getting better performance. There are various sets of parameters provided by the operating system under different categories. Those values can be changed using the appropriate tools that come along with the operating system (for example, the Registry in MS-Windows can be edited using regedit.exe). These parameters in the operating system are grouped under different categories to explain their impact, as given below.

Performance Benchmarking

Performance benchmarking is about comparing the performance of product transactions with that of the competitors. No two products can have the same architecture, design, functionality, and code. The customers and types of deployments can also be different. Hence, it will be very difficult to compare two products on those aspects. End-user transactions/scenarios could be one approach for comparison. In general, an independent test team or an independent organization not related to the organizations of the products being compared does performance benchmarking. This does away with any bias in the test. The person doing the performance benchmarking needs to have the expertise in all the products being compared for the tests to be executed successfully. The steps involved in performance benchmarking are the following:

The results of performance benchmarking are published. There are two types of publications that are involved. One is an internal, confidential publication to product teams, containing all the three outcomes described above and the recommended set of actions. The positive outcomes of performance benchmarking are normally published as marketing collateral, which helps as a sales tool for the product. Also benchmarks conducted by independent organizations are published as audited benchmarks.

Capacity Planning

If performance tests are conducted for several configurations, the huge volume of data and analysis that is available can be used to predict the configurations needed for a particular set of transactions and load pattern. This reverse process is the objective of capacity planning. Performance configuration tests are conducted for different configurations and performance data are obtained. In capacity planning, the performance requirements and performance results are taken as inputs, and the configuration needed to satisfy that set of requirements is derived.

Capacity planning necessitates a clear understanding of the resource requirements for transactions/scenarios. Some transactions of the product associated with certain load conditions could be disk intensive, some could be CPU intensive, some of them could be network intensive, and some of them could be memory intensive. Some transactions may require a combination of these resources for performing better. This understanding of what resources are needed for each transaction is a prerequisite for capacity planning.

If capacity planning has to identify the right configuration for the transactions and particular load patterns, then the next question that arises is how to decide the load pattern. The load can be the actual requirement of the customer for immediate need (short term) or the requirements for the next few months (medium term) or for the next few years (long term). Since the load pattern changes according to future requirements, it is critical to consider those requirements during capacity planning. Capacity planning corresponding to short-, medium-, and long-term requirements are called

There are two techniques that play a major role in capacity planning. They are load balancing and high availability. Load balancing ensures that the multiple machines available are used equally to service the transactions. This ensures that by adding more machines, more load can be handled by the product. Machine clusters are used to ensure availability. In a cluster there are multiple machines with shared data so that in case one machine goes down, the transactions can be handled by another machine in the cluster. When doing capacity planning, both load balancing and availability factors are included to prescribe the desired configuration.
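As a rough illustration of how both factors could enter a capacity estimate, the sketch below sizes a cluster from a target load and a measured per-machine throughput, then adds spare machines for availability. It assumes the load balancer spreads load evenly and that throughput scales linearly with machine count, which real deployments only approximate; the function name `machines_needed` and the numbers are hypothetical.

```python
import math


def machines_needed(target_tps, per_machine_tps, spare_machines=1):
    """Estimate cluster size: enough machines for the load, plus spares.

    Assumes even load balancing and linear scaling across machines.
    """
    if target_tps <= 0 or per_machine_tps <= 0:
        raise ValueError("throughput values must be positive")
    if spare_machines < 0:
        raise ValueError("spare_machines must not be negative")
    for_load = math.ceil(target_tps / per_machine_tps)
    return for_load + spare_machines


# Hypothetical: 2500 tx/s required, each machine sustains 400 tx/s in the lab.
cluster_size = machines_needed(target_tps=2500, per_machine_tps=400, spare_machines=1)
print(cluster_size)  # 8
```

The spare machine lets the cluster keep meeting the target if one machine goes down, at the cost of extra hardware.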

The majority of capacity planning exercises are only interpretations of data and extrapolation of the available information. A minor mistake in the analysis of performance results or in extrapolation may cause a deviation in expectations when the product is used in deployments. Moreover, capacity planning is based on performance test data generated in the test lab, which is only a simulated environment. In real-life deployment, there could be several other parameters that may impact product performance. As a result of these unforeseen reasons, apart from the skills mentioned earlier, experience is needed to know real-world data and usage patterns for the capacity planning exercise.

There are two types of tools that can be used for performance testing - functional performance tools and load tools. Functional performance tools help in recording and playing back the transactions and obtaining performance numbers. This test generally involves very few machines. Load testing tools simulate the load condition for performance testing without having to keep that many users or machines. The load testing tools simplify the complexities involved in creating the load and without such load tools it may be impossible to perform these kinds of tests. As was mentioned earlier, this is only a simulated load and real-life experience may vary from the simulation.

Here are some popular performance tools:

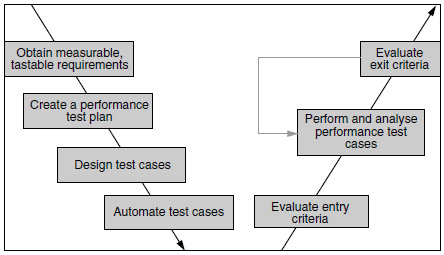

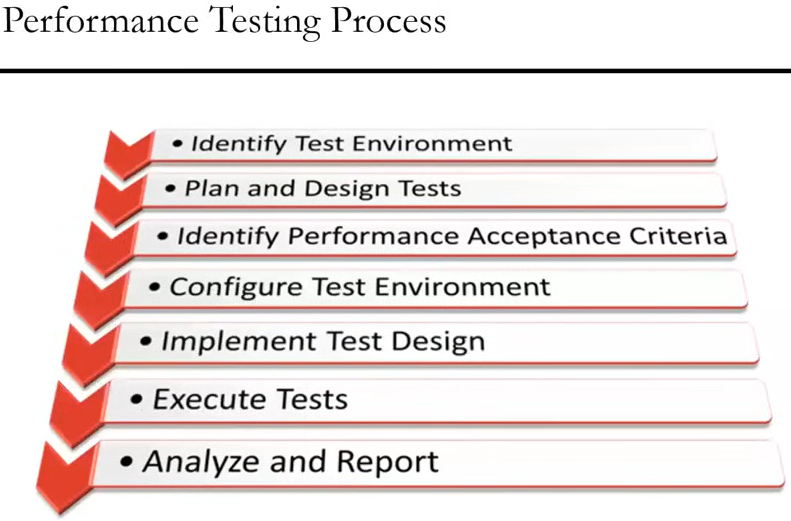

Performance testing follows the same process as any other testing type. The only difference is in getting more details and analysis. As mentioned earlier, the effort involved in performance testing is greater and tests are generally repeated several times. The increased effort is reflected in increased costs, as the resources needed for performance testing are quite high. A major challenge involved in performance testing is getting the right process so that the effort can be minimized. A simple process for performance testing tries to address these aspects in the below figures.

Ever-changing requirements for performance are a serious threat to the product, as performance can only be improved marginally by fixing it in the code. As mentioned earlier, a majority of the performance issues require rework or changes in architecture and design. Hence, it is important to collect the requirements for performance earlier in the life cycle and address them, because changes to architecture and design late in the cycle are very expensive. While collecting requirements for performance testing, it is important to decide whether they are testable, that is, to ensure that the performance requirements are quantified and can be validated in an objective way. If so, the quantified expectation of performance is documented. Making the requirements testable and measurable is the first activity needed for the success of performance testing. The next step in the performance testing process is to create a performance test plan. This test plan needs to have the following details.

Entry and exit criteria play a major role in the process of performance test execution. At regular intervals during product development, the entry criteria are evaluated and the test is started if those criteria are met. There can be a separate set of criteria for each of the performance test cases. The entry criteria need to be evaluated at regular intervals since starting the tests early is counter-productive and starting late may mean that the performance objective is not met on time before the release. At the end of performance test execution, the product is evaluated to see whether it met all the exit criteria. Each of the process steps for the performance tests described above is critical because of the factors involved (that is, cost, effort, time, and effectiveness). Hence, keeping a strong process for performance testing provides a high return on investment.

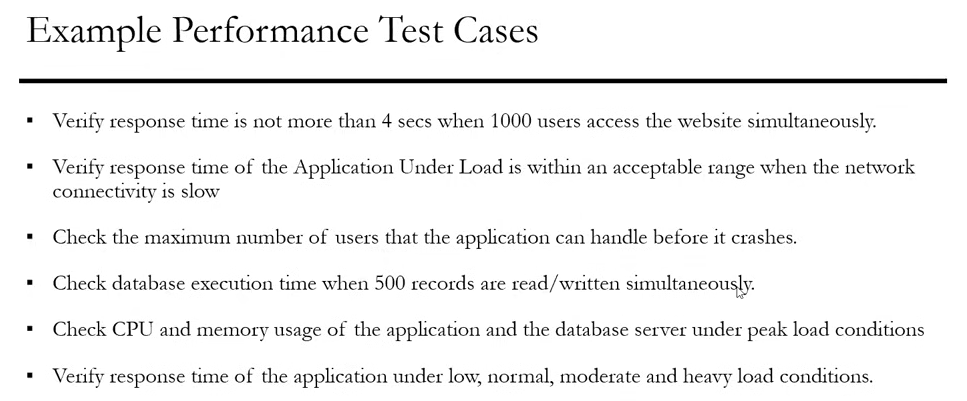

The below diagram depicts some performance testing test cases.

We will complete the following problems and exercises during lecture or during lab time.